Face authentication is only as strong as its liveness checks. This piece gives a compact taxonomy of physical presentation attacks (PAs) and a practical coverage plan suitable for production systems. Terms align with ISO/IEC 30107-3; the taxonomy reflects Axon Labs’ field experience (with a review of NIST’s 2023 Face Analysis Technology Evaluation).

Quick definitions

- Presentation Attack (PA): An attempt to spoof face recognition by presenting a physical artifact to the camera

- Presentation Attack Instrument (PAI): The artifact itself (print, screen, mask)

- Liveness / PAD (presentation attack detection): Techniques to detect PAs during verification

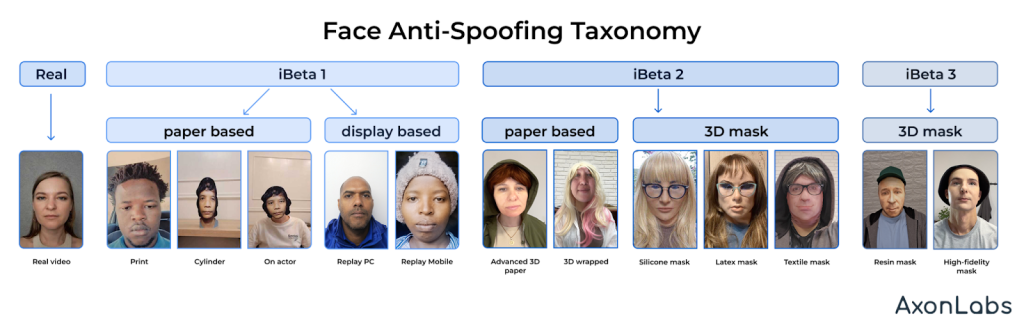

Core physical classes (Axon Labs taxonomy)

- A) Printed (flat, cutouts, cylindrical wraps, 3D paper)

Examples: eye/mouth cutouts, cylindrical wraps, 3D paper heads.

Minimum tests: ≥5 unique prints/materials (matte/glossy), multiple printers, varied distance/angles, different lighting conditions

- B) Screen Replay

Examples: photos/videos replayed on phone, tablet, laptop, monitor

Minimum tests: ≥4 screen types × 3 brightness levels; front camera vs external webcam; variable frame rates

Example dataset: Replay Attacks Dataset by Axon Labs

- C) Photo-on-Actor (flat or wrapped)

Examples: flat photo worn on face, attributes printed in the photo (glasses/hood), 3D-wrapped photo on actor

Signals & risks: seam/edge discontinuities, depth mismatch, fixed expression

Minimum tests: ≥5 actors, 2–3 fixation methods, with/without real accessories

- D) 3D Masks

Subclasses: resin, latex, silicone, hyper-real (indoor/outdoor)

Minimum tests: ≥2 manufacturers per subclass; with wigs/glasses/beards; turning sequences

Example dataset: iBeta Level 2 Dataset

- E) Textile/Fabric Masks

Examples: fabric masks/balaclavas with printed faces; hood + glasses combinations

Minimum tests: ≥3 fabric types (density/print), indoor/outdoor, different motion levels

Image: Axon Labs physical face-attack taxonomy — printed, cutout, cylindrical, replay, photo-on-actor, resin/latex/silicone masks, textile masks
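The "minimum tests" lines above are easier to schedule when written out as an explicit capture matrix. Below is a minimal sketch for the Screen Replay class only. The labels (`phone`, `low`, `front_camera`, and so on) and the function name are illustrative assumptions: the taxonomy gives counts and categories, not these exact values, and frame rate is left out for brevity.

```python
from itertools import product

# Illustrative labels (assumptions); the taxonomy specifies
# ">=4 screen types x 3 brightness levels; front camera vs external webcam".
SCREENS = ["phone", "tablet", "laptop", "monitor"]
BRIGHTNESS = ["low", "medium", "high"]
CAMERAS = ["front_camera", "external_webcam"]


def replay_test_matrix(screens=SCREENS, brightness=BRIGHTNESS, cameras=CAMERAS):
    """Return every (screen, brightness, camera) combination to capture."""
    return [
        {"screen": s, "brightness": b, "camera": c}
        for s, b, c in product(screens, brightness, cameras)
    ]


if __name__ == "__main__":
    matrix = replay_test_matrix()
    # 4 screens * 3 brightness levels * 2 cameras
    print(len(matrix), "capture conditions")
```

The same pattern extends to the other classes by swapping in their own axes (for example, fabric type, indoor/outdoor, and motion level for textile masks).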

Coverage checklist (“production-ready” minimum)

- Attack videos: ≥1,000 in total; for robustness, target ≥1,000 per PAI category

- Actors: >100 unique participants

- Devices: ≥3 cameras (two phones + one laptop/external webcam)

- Lighting: bright / dim / strong backlight; indoor & outdoor

- Distance & pose: 30–80 cm; yaw/pitch/roll; with & without glasses/facial hair/hood

- Mandatory metadata: PAI type & subclass, material/brand, printer/screen model & settings, capture device/lens, distance, lighting, operator, date/series, outcome (success/fail)
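One way to keep the checklist honest is to store the mandatory metadata as a typed record and check a collection against the minimums automatically. The sketch below is an assumption-laden illustration, not a prescribed schema: `AttackSample`, `coverage_gaps`, and `videos_per_pai` are hypothetical names, the record holds only a subset of the metadata fields listed above, and ">100 actors" is read as at least 101.

```python
from collections import Counter
from dataclasses import dataclass

# Lighting conditions from the checklist (labels are illustrative).
REQUIRED_LIGHTING = {"bright", "dim", "backlight"}


@dataclass(frozen=True)
class AttackSample:
    """Hypothetical metadata record; a subset of the mandatory fields."""
    pai_type: str
    pai_subclass: str
    actor_id: str
    capture_device: str
    lighting: str
    distance_cm: float
    outcome: str  # "success" or "fail"


def coverage_gaps(samples, min_videos=1000, min_actors=101, min_devices=3):
    """Return human-readable gaps against the checklist minimums."""
    gaps = []
    if len(samples) < min_videos:
        gaps.append(f"attack videos: {len(samples)} < {min_videos}")
    actors = {s.actor_id for s in samples}
    if len(actors) < min_actors:
        gaps.append(f"actors: {len(actors)} < {min_actors}")
    devices = {s.capture_device for s in samples}
    if len(devices) < min_devices:
        gaps.append(f"devices: {len(devices)} < {min_devices}")
    missing = REQUIRED_LIGHTING - {s.lighting for s in samples}
    if missing:
        gaps.append(f"lighting not covered: {sorted(missing)}")
    return gaps


def videos_per_pai(samples):
    """Count videos per PAI type, to compare with the per-category target."""
    return Counter(s.pai_type for s in samples)
```

An empty list from `coverage_gaps` means the overall minimums are met; `videos_per_pai` shows which PAI categories still fall short of the ≥1,000 robustness target.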

Metrics & reporting

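Report APCER (attack presentation classification error rate), BPCER (bona fide presentation classification error rate), ACER (average classification error rate), and EER (equal error rate) for each class and device. Map procedures to ISO/IEC 30107-3 and disclose unknown-PAI performance. (Useful references: ISO/IEC 30107-3, FIDO Alliance biometric requirements, and iBeta PAD testing.)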
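As a rough illustration of how these numbers relate, here is a minimal sketch that computes them from liveness scores. It rests on several assumptions: a higher score means "more likely bona fide", a sample is accepted when its score is at or above the threshold, ACER is taken as the mean of the worst per-class APCER and BPCER (some reports average APCER across classes instead), and EER is approximated by sweeping the observed scores. All function names are hypothetical.

```python
def apcer(attack_scores, threshold):
    """Share of attack presentations wrongly accepted as bona fide."""
    return sum(s >= threshold for s in attack_scores) / len(attack_scores)


def bpcer(bona_fide_scores, threshold):
    """Share of bona fide presentations wrongly rejected as attacks."""
    return sum(s < threshold for s in bona_fide_scores) / len(bona_fide_scores)


def per_class_report(attacks_by_class, bona_fide_scores, threshold):
    """APCER per PAI class, BPCER, and ACER using the worst-class APCER."""
    apcers = {
        name: apcer(scores, threshold)
        for name, scores in attacks_by_class.items()
    }
    b = bpcer(bona_fide_scores, threshold)
    worst = max(apcers.values())
    return {"apcer": apcers, "bpcer": b, "acer": (worst + b) / 2}


def approx_eer(attack_scores, bona_fide_scores):
    """Sweep observed scores; return (threshold, rate) where APCER and
    BPCER are closest. The rate is the mean of the two at that point."""
    candidates = sorted(set(attack_scores) | set(bona_fide_scores))
    best = min(
        candidates,
        key=lambda t: abs(apcer(attack_scores, t) - bpcer(bona_fide_scores, t)),
    )
    rate = (apcer(attack_scores, best) + bpcer(bona_fide_scores, best)) / 2
    return best, rate
```

Running `per_class_report` once per capture device gives the class-by-device breakdown; reporting the per-class dictionary rather than only the aggregate keeps a weak class from hiding behind a good average.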

iBeta PAD: scope and limits

iBeta PAD certification (aligned to ISO/IEC 30107-3 terminology) demonstrates that, at a defined operating point, a system resists a specified set of physical PAs performed by trained operators under controlled conditions. This is valuable, but it is not a blanket guarantee against unknown PAIs, new mask materials, different cameras or lighting, or non-physical threats (e.g., injection, deepfakes). Treat it as a baseline: reproduce the lab procedure on target devices, then extend it with the coverage checklist; report per-class APCER alongside overall ACER; confirm the threshold on a held-out set; and log latency/throughput on production hardware. Common preparation errors: confusing replay with print attacks, low variability, and no clean genuine baselines.
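The "confirm the threshold on a held-out set" step can be sketched as follows. This is an illustration under stated assumptions rather than the iBeta procedure: scores are liveness scores where higher means more likely bona fide, the threshold is chosen on a development set to meet a target BPCER, and the target and limit values passed in are placeholders that the article does not specify. Function names are hypothetical.

```python
def pick_threshold(dev_bona_fide, target_bpcer):
    """Largest observed score usable as a threshold while rejecting at most
    target_bpcer of the development bona fide samples."""
    ranked = sorted(dev_bona_fide)
    allowed_rejects = int(target_bpcer * len(ranked))
    # Scores strictly below ranked[allowed_rejects] number at most
    # allowed_rejects, so dev BPCER stays within the target.
    return ranked[allowed_rejects]


def confirm_on_held_out(threshold, held_bona_fide, held_attacks,
                        max_bpcer, max_apcer):
    """Re-measure both error rates on data not used to pick the threshold."""
    bp = sum(s < threshold for s in held_bona_fide) / len(held_bona_fide)
    ap = sum(s >= threshold for s in held_attacks) / len(held_attacks)
    return {
        "bpcer": bp,
        "apcer": ap,
        "passes": bp <= max_bpcer and ap <= max_apcer,
    }
```

If `passes` is false on the held-out set, the operating point was likely overfit to the development data and should be revisited before extending coverage to new PAIs.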